- NEWSNEWS

- OPINIONOPINION

- WATCH

- BUSINESS

- FEATURES

- DONATE

- ABOUT USABOUT US

- PUBLICATIONSPUBLICATIONS

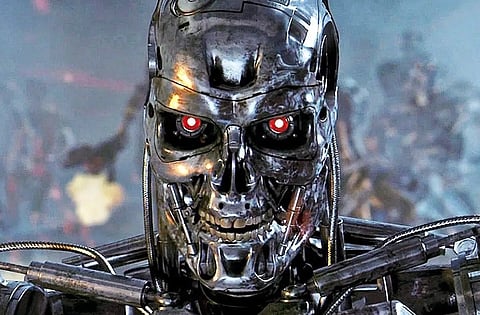

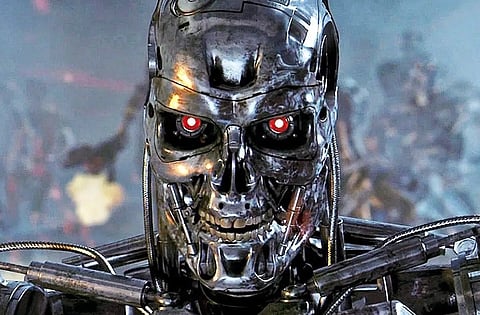

If you’re a fan of science fiction, chances are you have seen at least one of the Terminator movies, starring Arnold Schwarzenegger as a humanoid robot serving Skynet, the AI system that sets out to wipe out humanity once it goes active.

And while that is sci-fi, the truth is that the Pentagon has moved one step closer to artificial intelligence (AI) weapons that can kill people.

But the US is not the only one pursuing autonomous weapons.

Many countries are working on them, and China, Russia, Iran, India and Pakistan have not signed a US-initiated pledge to use military AI responsibly.

The Pentagon's portfolio boasts more than 800 unclassified AI-related projects, many still in testing. Typically, machine learning and neural networks help humans gain insights and create efficiencies.

Alongside humans, robotic swarms in the skies or on the ground could attack enemy positions from angles regular troops can’t. And now those weapons might be closer to reality than ever before, Task & Purpose reported.

That is according to a new report from the Associated Press on the Pentagon’s Replicator program.

The program is meant to accelerate the Department of Defense’s use of cheap, small, easy-to-field drones run by artificial intelligence, the report said.

What is the goal? To have thousands of these weapons platforms by 2026, to counter the size of China’s fast-growing military.

The report notes that officials and scientists agree the US military will soon have fully autonomous weapons, but they want to keep a human in charge of overseeing their use.

The question the military faces is how to decide if, or when, it should allow AI to use lethal force. And how does it tell friend from foe?

When I attended a military roundtable in Washington, DC, in 2019, the US Army general being quizzed assured all the journalists in attendance, including me, that a human must always be in the kill-chain loop.

As for friend-or-foe decisions, the answer was pat: "We're working on it."

Regardless, governments are looking at ways to limit or guide just how AI can be used in war.

And better to do it now than later, on the eve of battle, as one US official put it.

The New York Times reported on several of those concerns, including US-China talks on limiting how AI is used in connection with nuclear weapons, to prevent a Skynet-type scenario.

However, these talks and proposals remain hotly debated, with some parties saying no regulation is needed and others proposing extremely narrow limits, Task & Purpose reported.

So even as the world moves closer to these kinds of AI weapons, legal guidance for their use in war remains unclear on the international stage.

The US military has already worked extensively with robotic, remote-controlled and outright AI-run weapons systems.

Soldiers are currently training to repel drone swarms using both high-tech counter-drone weaponry and more conventional, kinetic options.

At AUSA 2023, the Association of the United States Army's annual meeting in Washington, DC, last month, I saw many of these systems, including some that were hand-held.

The problem is that the drone has to be within visual range: it must be seen, either by the shooter or by a high-tech camera system able to identify a drone from just a few signature pixels.

These systems are mobile and can be installed on several platforms.

However, change is in the wind.

"Our military is going to have to change if we are going to continue to be superior to every other military on Earth," Gen. Mark Milley, the former chairman of the Joint Chiefs of Staff, told 60 Minutes correspondent Norah O'Donnell last month.

Milley said artificial intelligence will speed up and automate the so-called OODA loop (observe, orient, decide and act), the decision cycle meant to outwit an adversary.

More than two centuries ago, this strategy looked like Napoleon getting up in the middle of the night to issue orders before the British woke up for tea, Milley explained.

Soon, it will be computers automatically analyzing information to help decide where and when to move troops.

"Artificial intelligence is extremely powerful," Milley said. "It's coming at us. I suspect it will be probably optimized for command and control of military operations within maybe ten to 15 years, max."

For now, the Department of Defense standard is for a human to remain in the OODA loop for all decision-making, and department guidelines say fully autonomous weapons systems must "allow commanders and operators to exercise appropriate levels of human judgment over the use of force."

According to Deputy Secretary of Defense Kathleen Hicks, that standard will apply to Replicator, the Pentagon program mentioned earlier.

"Our policy for autonomy in weapon systems is clear and well-established: There is always a human responsible for the use of force. Full stop," Hicks told CBS News last month.

"Anything we do through this initiative, or any other, must and will adhere to that policy."

AI employed by the US military has piloted pint-sized surveillance drones in special operations forces’ missions and helped Ukraine in its war against Russia.

It tracks soldiers’ fitness, predicts when Air Force planes need maintenance and helps keep tabs on rivals in space.

While its funding is uncertain and its details vague, Replicator is expected to accelerate hard decisions on which AI technology is mature and trustworthy enough to deploy, ABC News reported.

Just how powerful is AI?

The US Space Force is using an operational prototype called Machina that autonomously keeps tabs on more than 40,000 objects in space, orchestrating thousands of data collections nightly with a global telescope network in the blink of an eye.

Machina's algorithms marshal telescope sensors. Computer vision and large language models tell them which objects to track. And AI choreographs the effort, drawing instantly on astrodynamics and physics datasets.

An amazing world of AI beckons.

But the big question remains: will AI make war more likely? Are we rapidly heading toward an Armageddon beyond our control?

"It could. It actually could," Milley said. "Artificial intelligence has a huge amount of legal, ethical and moral implications that we're just beginning to start to come to grips with."